Noisebridge went to the Maker Faire: In this article you will learn about NGALAC — the…

Noisebridge is a San Francisco-based makerspace, a member-owned collective where people collaborate on cool projects, make things, host and teach classes, and train each other in programming, math, laser cutting, woodworking, metalworking, sewing, 3D printing, passing coding interviews, making music, repairing electronics, circuit hacking, building video games and virtual reality applications, and much more.

This article was written by Micah Blumberg and edited by Bernice Anne W. Chua.

Micah is a Journalist, Analyst Researcher, and a Neurohacker.

Bernice is an Indie Videogame Developer and Software Engineer.

I want to tell you why I love Noisebridge in San Francisco, why you should consider joining, coming here to learn, participating in projects, or teaching science and technology here, and why you should consider donating to keep Noisebridge going.

Noisebridge is a non-profit educational makerspace. It’s a place to come, learn, and make things. The people at Noisebridge help each other learn, teach each other classes, and work on projects. Folks can come by basically anytime during daytime open hours. Noisebridge is also a membership club: those who choose to become paying members take on additional responsibilities and gain additional privileges.

I first started coming to Noisebridge in November 2017, and little by little I have gotten to know its members, like Mark Wilson, who runs the Gaming Archivists meetup at Noisebridge. It was Mark’s dream to build an arcade machine simulator. Someone else had the idea to make it stream to Twitch and YouTube Live, and thus the collaboration known as NGALAC (Noisebridge Gaming Archivists Livestreaming Arcade Cabinet) began.

Editor’s Note (Bernice Anne W. Chua writes: About NGALAC)

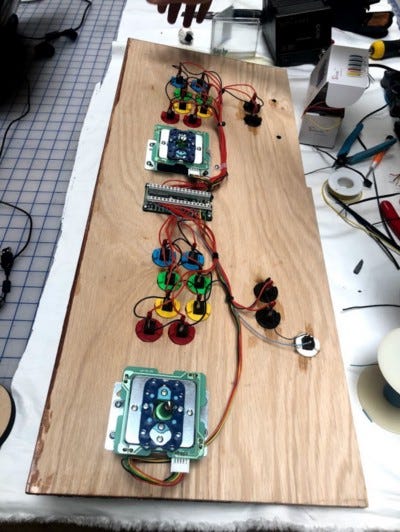

“Noisebridge Gaming Archivists Livestreaming Arcade Cabinet, shortened to NGALAC, was created using the Internet Archive’s emulated games from classic consoles, with a Raspberry Pi running EmulationStation to run the game emulations. The games are captured by an Elgato capture card and sent to the livestreaming PC, which uses Open Broadcaster Software (OBS) to broadcast the livestreamed games to restream.io, which in turn broadcasts everything to streaming services such as Twitch and YouTube Live.

Our streams can be found here:

https://gaming.youtube.com/channel/UCjDvPM0uHcvx9jJA5gnM-ng/live

and

https://www.twitch.tv/noisebridge

And more information about NGALAC can be found here:

https://www.noisebridge.net/wiki/NGALAC ”

Thanks, Bernice.

Please watch this short video, recorded on May 8th 2018, in which I interviewed some of the folks who created NGALAC: https://www.facebook.com/worksalt/videos/2213422328684402/

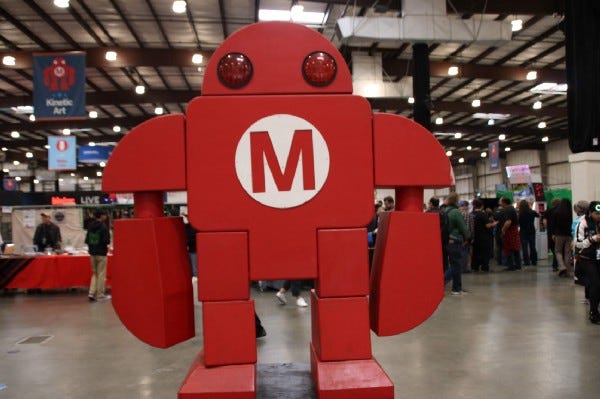

Shortly thereafter, I went to the Maker Faire (I think on May 20th 2018) to see NGALAC being demoed, and I quickly recorded this short video of NGALAC in action at the legendary Maker Faire: https://youtu.be/HuHgt1IhPT4

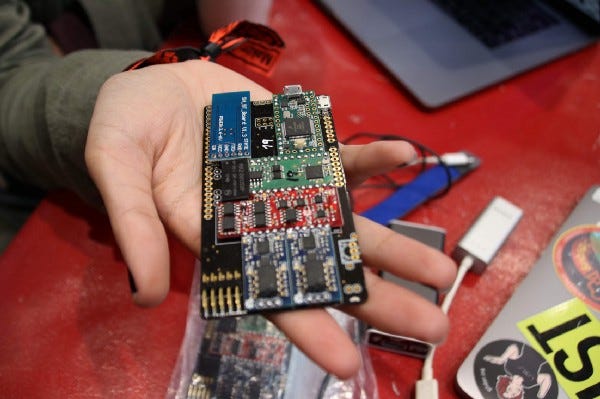

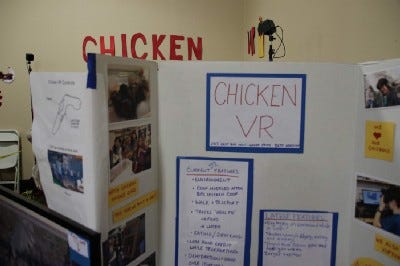

Noisebridge also showcased other projects during Maker Faire: a photogrammetry-based model of Noisebridge running in virtual reality, which let people who have never been to Noisebridge experience being there, and the Brainduino EEG system, which displayed the brainwaves of its users. The original plan had been to integrate the Brainduino EEG with NGALAC, but we didn’t pull it off in time for the Maker Faire.

While filming that video, I met the maker of the Brainduino EEG system, Masahiro Kahata: http://www.psychiclab.net/IBVA/Profile.html

It was during the interview that I realized why the Brainduino was special: its claim to fame is its noise-cancellation features and improved signal processing, which set it apart from other consumer-grade EEG devices.

Prior to this, I had been organizing a meetup called NeurotechSF since February 2018, and we had been looking to combine VR with EEG and AI. My first pitch to the NeurotechSF group was to combine the OpenBCI with the HTC Vive. However, I had been given an Oculus Go for attending F8 on May 1st 2018, and now there was this cool new EEG device with improved noise cancellation and signal quality, so I decided to ask the DreamTeam whether the Friday meetup I was organizing could borrow their Brainduino device to work on connecting EEG to VR.

NeurotechSF is part of NeurotechX, a global organization of neurohacker meetups with 21 chapters worldwide.

Just to clarify, there is a DreamTeam Neuro-hackers group that meets Wednesdays at Noisebridge from 8pm to 10pm, and a totally separate group that I organize, called NeurotechSF + SF VR, that meets every Friday from 8pm to 10pm. Even though we are two separate groups, we have been working on the same project (which I call the Neurohaxor Project) since June 29th 2018.

We actually accomplished several of our goals. We were able to bring the EEG signals into WebVR, and later we were able to bring the live EEG signals into WebVR and into the Oculus Go at the same time.

Here is how the pipeline works. We take the raw voltages from the contact points on the skin and bring them into the Brainduino as an array of numbers. From there they go into a Python server that defines a channel 0 and a channel 1 (if I remember correctly, the Python server was mostly written by the Wednesday DreamTeam). Finally, the data goes into our HTML page, which uses A-Frame (aframe.io), the WebVR framework from Mozilla. The web page took a ton of effort from a lot of people who have visited the NeurotechSF and SF VR meetups at Noisebridge. The DreamTeam also helped, though I think their focus has been, and mostly still is, on the server side, and we are very grateful for their support and help!

We eventually decided to use a websocket to send a live stream of data to the web page, because we needed a continuous stream of data rather than a single static serving of EEG data. In the web page, we split the websocket array into two channels, and each channel is set to modify the x, y, or z position value of our WebVR objects. The shapes in WebVR move automatically when the sensors are live, and the typical result people see is that the movement seems to stabilize or slow down when all three sensors are in contact with human skin.
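As a rough illustration of the channel-splitting step described above, here is a minimal Python sketch. It is not the project’s code (the real page does this in JavaScript inside A-Frame). It assumes, hypothetically, that each websocket message is a JSON array of interleaved samples [ch0, ch1, ch0, ch1, ...]; the function names, base position, and scale factor are made up for illustration.

```python
import json
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def split_channels(message: str) -> Tuple[List[float], List[float]]:
    """Split one websocket message into channel 0 and channel 1.

    Assumes a hypothetical JSON array of interleaved samples:
    [ch0, ch1, ch0, ch1, ...].
    """
    samples = [float(v) for v in json.loads(message)]
    if len(samples) % 2 != 0:
        raise ValueError("expected an even number of interleaved samples")
    return samples[0::2], samples[1::2]


def to_position(ch0: List[float], ch1: List[float],
                base: Vec3 = (0.0, 1.5, -3.0), scale: float = 0.01) -> Vec3:
    """Offset an object's position by the latest sample of each channel.

    Channel 0 drives x and channel 1 drives y; z stays at its base value.
    `base` and `scale` are illustrative values, not taken from the project.
    """
    if not ch0 or not ch1:
        raise ValueError("both channels need at least one sample")
    x0, y0, z0 = base
    return (x0 + scale * ch0[-1], y0 + scale * ch1[-1], z0)


if __name__ == "__main__":
    ch0, ch1 = split_channels("[12.0, -30.0, 15.0, -28.0, 20.0, -25.0]")
    print(ch0, ch1)  # [12.0, 15.0, 20.0] [-30.0, -28.0, -25.0]
    print(to_position(ch0, ch1))
```

Mapping channel 0 to x and channel 1 to y is just one possible choice; the project lets each channel drive the x, y, or z value, so the axes can be swapped as needed.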

On July 29th I shared the following video, which was viewed 7.9k times: “Progress! On the NeurotechSF + SF VR Hacknight #3 we made major progress towards the goal of integrating EEG with WebXR, and VR via an Oculus Go. As you can see in the video the actual voltages from the sensors are causing the shapes in our A-Frame application to run! This took a lot of work and time over many meetups to figure out! Thank you to everyone who has contributed. I feel like this was a significant milestone! Join our discord at http://neurohaxor.com”

https://www.facebook.com/worksalt/videos/2332211350138832/

Then, on August 22nd, we were able to bring the WebVR experience into the Oculus Go VR headset. I shared this on Facebook at the time: “We did it! Kevin is the first person in the world to see reflections of his brainwave patterns in an Oculus Go at 9:10pm PT Wednesday August 22nd. We connected a Brainduino EEG system to an Oculus Go with WebVR!” https://www.facebook.com/100000499704585/posts/2378028395557127/

Here is a video to go with it: https://www.facebook.com/worksalt/videos/2378065355553431/

As of October 10th 2018, the project has an FFT (Fast Fourier Transform) built in, along with the ability to display a time series and to separate out component frequency bands such as Gamma, Alpha, Beta, Theta, and Delta.
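The band separation can be sketched like this: take a window of samples from one channel, run an FFT, and sum the power of the frequency bins that fall inside each band. This is a minimal pure-Python sketch, not the project’s implementation. The 256 Hz sample rate used in the check below and the band edges in BANDS are assumptions (published band boundaries vary), and this radix-2 FFT needs a window whose length is a power of two.

```python
import cmath
import math
from typing import Dict, List

# Conventional EEG band edges in Hz. Exact boundaries differ between
# sources; these are illustrative, not the project's values.
BANDS = {
    "Delta": (0.5, 4.0),
    "Theta": (4.0, 8.0),
    "Alpha": (8.0, 13.0),
    "Beta": (13.0, 30.0),
    "Gamma": (30.0, 100.0),
}


def fft(x: List[complex]) -> List[complex]:
    """Recursive radix-2 Cooley-Tukey FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return list(x)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a power of two")
    even = fft(x[0::2])
    odd = fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        # Twiddle factor combines the even and odd half-spectra.
        t = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out


def band_powers(samples: List[float], sample_rate: float) -> Dict[str, float]:
    """Sum the spectral power of one channel's window into each EEG band."""
    n = len(samples)
    spectrum = fft([complex(s) for s in samples])
    powers = {name: 0.0 for name in BANDS}
    for k in range(1, n // 2):  # skip the DC bin, keep positive frequencies
        freq = k * sample_rate / n
        for name, (lo, hi) in BANDS.items():
            if lo <= freq < hi:
                powers[name] += abs(spectrum[k]) ** 2
    return powers
```

Calling band_powers(window, 256.0) on 256 samples of a pure 10 Hz sine puts nearly all of the power in the Alpha band. In practice, numpy.fft.rfft with a tapering window such as a Hann window would be faster and would reduce spectral leakage; the hand-written FFT only keeps the example dependency-free.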

We have also created a time stamp for the EEG, and a time stamp for when a user looks at an object (meaning the center of their gaze, which is marked by a tiny circle, sends a ray to the nearest object and reports its identity with the time stamp).
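The gaze time stamp can be sketched as a ray cast from the headset through the center of the view, keeping the closest object it hits. A-Frame provides cursor and raycaster components for this in the browser; the Python below only illustrates the geometry. It treats every object as a bounding sphere and uses hypothetical names (Sphere, GazeEvent, gaze_pick) that do not come from the project.

```python
import math
import time
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Sphere:
    object_id: str  # hypothetical identifier for a scene object
    center: Vec3
    radius: float


@dataclass
class GazeEvent:
    timestamp: float
    object_id: str


def ray_sphere_distance(origin: Vec3, direction: Vec3, s: Sphere) -> Optional[float]:
    """Distance along a unit-length ray to the sphere's surface, or None on a miss.

    Solves |origin + t*direction - center|^2 = radius^2 for the smallest t >= 0.
    """
    oc = [o - c for o, c in zip(origin, s.center)]
    b = sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - s.radius ** 2
    disc = b * b - c
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):  # near hit first, far hit if inside
        if t >= 0:
            return t
    return None


def gaze_pick(origin: Vec3, direction: Vec3, objects: List[Sphere],
              timestamp: Optional[float] = None) -> Optional[GazeEvent]:
    """Return the nearest object hit by the gaze ray, stamped with a time."""
    norm = math.sqrt(sum(d * d for d in direction))
    if norm == 0:
        raise ValueError("gaze direction must be non-zero")
    unit = tuple(d / norm for d in direction)
    best: Optional[Tuple[float, Sphere]] = None
    for obj in objects:
        t = ray_sphere_distance(origin, unit, obj)
        if t is not None and (best is None or t < best[0]):
            best = (t, obj)
    if best is None:
        return None
    ts = time.time() if timestamp is None else timestamp
    return GazeEvent(ts, best[1].object_id)
```

Passing an explicit timestamp makes the function easy to test; in a live session it falls back to the current wall-clock time, which should come from the same clock as the EEG time stamps so the two streams can be lined up.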

We are creating time-stamped user data and time-stamped EEG data because we want to send this data to a database, perhaps a spreadsheet, and then use it to train our neural network, which may end up being PyTorch, TensorFlow, or perhaps some variant of AutoML such as Baidu’s EasyDL. After our neural network is trained, we are going to ask it to predict what the user is doing based only on brainwave data.
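Once both streams carry time stamps, one way to build training data is to label each EEG sample with whatever object the user was looking at when it was recorded. The sketch below assumes a simple rule (use the most recent gaze event at or before each sample) and writes the result into SQLite from Python’s standard library as a stand-in for MySQL or a spreadsheet. The function names, table name, and columns are hypothetical.

```python
import bisect
import sqlite3
from typing import List, Sequence, Tuple

EegRow = Tuple[float, float, float]          # (timestamp, ch0, ch1)
GazeRow = Tuple[float, str]                  # (timestamp, object_id)
LabeledRow = Tuple[float, float, float, str]


def label_eeg(eeg: Sequence[EegRow], gaze: Sequence[GazeRow]) -> List[LabeledRow]:
    """Attach to each EEG sample the object the user was looking at.

    Both inputs must be sorted by timestamp. Each sample takes the most
    recent gaze event at or before it; samples recorded before the first
    gaze event are dropped because they have no label.
    """
    gaze_times = [t for t, _ in gaze]
    rows: List[LabeledRow] = []
    for ts, ch0, ch1 in eeg:
        i = bisect.bisect_right(gaze_times, ts) - 1
        if i >= 0:
            rows.append((ts, ch0, ch1, gaze[i][1]))
    return rows


def store(conn: sqlite3.Connection, rows: Sequence[LabeledRow]) -> None:
    """Write labeled rows into a hypothetical `training` table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS training "
        "(ts REAL, ch0 REAL, ch1 REAL, label TEXT)"
    )
    conn.executemany("INSERT INTO training VALUES (?, ?, ?, ?)", rows)
    conn.commit()
```

A neural network could then be trained with the ch0 and ch1 columns (or band powers derived from them) as inputs and the label column as the target, which matches the goal of predicting user behavior from brain data alone.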

As of October 10th 2018, we have transitioned from our first server, built in Python, to a new server written in Go (by Kevin from the DreamTeam of Neurohackers, which meets Wednesdays at Noisebridge from 8pm to 10pm). Within a couple of weeks we will have it running on a Raspberry Pi Zero W instead of the big Debian Linux desktop workstation we have been using so far, and we may also get a Mac Mini to run it on.

Once this is done, sometime in the next few weeks, I think we will return to building support for the NGALAC project. The original plan was to record EEG data while people played on the NGALAC arcade cabinet and to show their EEG data on Twitch and YouTube Live. On top of that, I think we could create a virtual NGALAC in exactly the same physical space as the real one, to increase the sense of immersion while people play a real arcade cabinet in reality and in VR at the same time. I told Mark Wilson about this idea and he laughed. He pointed out that it’s kinda pointless, but that’s exactly why he likes the idea. So we may just do it.

Since the community helped build this code, we have shared it on GitHub at https://github.com/micah1/neurotech, so you can use it to build your own EEG/WebVR/Oculus Go business if you want to. But if you strike it rich, I would encourage you to donate to Noisebridge, to the NeurotechSF group, to SF VR, and to the Wednesday DreamTeam Neurohackers, so we can continue to create things in this space and acquire new equipment to hack on.

Note that Neurohaxor (the BCI-to-WebVR project I described above) also involves a Raspberry Pi (to host a server written in Go), MySQL, blockchain (for privacy), and neural networks that are trained on both user data and brain data so they can predict user data from brain data alone. You can find the meetup at https://www.meetup.com/NeuroTechSF/

The photos on this page are reusable under the following license: Creative Commons Attribution 4.0 International (CC BY 4.0).

“ Attribution — You must give appropriate credit, provide a link to the license, and indicate if changes were made. You may do so in any reasonable manner, but not in any way that suggests the licensor endorses you or your use.”